
Loki Logging End to End in Practice: A Complete Guide from Collection to Alerting

- Introduction: Why Loki?

Pain points of traditional logging systems

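- High resource consumption: ELK (Elasticsearch) has to build complex indices for full-text search, so storage costs climb steeply. According to official figures, compression typically shrinks the data by 20% to 30%; in our tests some scenarios reached 50%, and with the DEFLATE compression algorithm the reduction can exceed 70%.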

- Poor cloud-native fit: managing log labels is difficult in dynamic Kubernetes environments.

Loki's core advantages

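- Lightweight design: only metadata (labels) is indexed, giving a compression ratio of close to 10x; at that ratio, 5 GB of ingested logs adds only about 0.5 GB of storage.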

- Seamless integration: native support for the Prometheus ecosystem and native Grafana integration, covering alerting on both cluster metrics and logs.

- Dynamic labels: a natural fit for the labels of dynamic Kubernetes resources such as Pods and Nodes.
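To make the "index only labels" idea concrete, here is a toy Python model, not Loki's actual implementation: the only indexed data is the set of label combinations (each combination identifies one stream), while the log lines are simply appended to per-stream chunks and compressed as a whole. The class name ToyLogStore and the sample lines are hypothetical, and zlib (which wraps a DEFLATE stream) merely stands in for whatever chunk codec a real deployment is configured with.

```python
import zlib


class ToyLogStore:
    """Toy model of label-only indexing (illustrative, not Loki's implementation)."""

    def __init__(self):
        self.index = {}   # frozenset of (label, value) pairs -> stream id: the only indexed data
        self.chunks = {}  # stream id -> raw log lines, stored but never indexed

    def append(self, labels, line):
        key = frozenset(labels.items())  # label order does not matter
        stream_id = self.index.setdefault(key, len(self.index))
        self.chunks.setdefault(stream_id, []).append(line)
        return stream_id

    def compression_ratio(self, stream_id):
        """Raw size / compressed size of one stream's chunk."""
        raw = "\n".join(self.chunks[stream_id]).encode("utf-8")
        return len(raw) / len(zlib.compress(raw))


if __name__ == "__main__":
    store = ToyLogStore()
    for i in range(1000):
        pod = f"frontend-{i % 2}"  # hypothetical pods
        line = f'level=info pod={pod} msg="request served" status=200 duration_ms={i % 50}'
        store.append({"app": "frontend", "pod": pod}, line)
    print(len(store.index))  # 2: one index entry per label set, however many lines
    print(round(store.compression_ratio(0), 1))
```

A thousand lines end up behind just two index entries, and repetitive log text compresses well; the actual ratio depends entirely on the data.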

- Loki architecture

Core components and data flow

Loki uses a distributed architecture that stores log data across multiple nodes; Promtail collects the logs, and Grafana provides visualization.

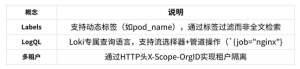
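In this data flow, Promtail ultimately ships log lines to Loki's HTTP push endpoint (/loki/api/v1/push, the same URL used in the Promtail configs in this article). As a sketch of what such a request carries, assuming the JSON form of the push API, the Python below builds a body in which each stream is a label set plus a list of [timestamp in nanoseconds as a string, log line] pairs. The helper names and the job: demo labels are hypothetical; push needs a reachable Loki instance and is not exercised here.

```python
import json
import time
import urllib.request


def build_push_body(labels, lines, ts_ns=None):
    """Build a JSON body for Loki's push API: one stream (label set) with its lines.

    Timestamps are Unix epoch nanoseconds encoded as strings; each line gets a
    1 ns offset so the entries keep their order within the stream.
    """
    base = time.time_ns() if ts_ns is None else ts_ns
    values = [[str(base + i), line] for i, line in enumerate(lines)]
    return {"streams": [{"stream": dict(labels), "values": values}]}


def push(url, body, timeout=5):
    """POST the body to Loki (e.g. http://loki:3100/loki/api/v1/push); returns the HTTP status."""
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Loki typically answers a successful push with 204 No Content. Promtail does the same job continuously, adding batching, retries and position tracking on top.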

Key concepts


- Deployment

Environment preparation

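- Hardware recommendations:

- Production: a cluster of at least 3 nodes (4 cores and 8 GB+ RAM per node)

- Storage: prefer SSDs plus S3-compatible object storage (such as MinIO)

- Version selection: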

```shell
# v2.8 or later is recommended
helm repo add grafana https://grafana.github.io/helm-charts
helm repo update
```

Kubernetes cluster deployment

```shell
# Deploy Loki Stack (including Promtail) with Helm
helm upgrade --install loki grafana/loki-stack \
  --set promtail.enabled=true \
  --set loki.persistence.enabled=true \
  --set loki.persistence.size=100Gi
```

Promtail configuration example

SQL

#promtail-config.yaml

server:

http_listen_port: 9080positions:

filename: /tmp/positions.yamlclients:

url: http://loki:3100/loki/api/v1/pushscrape_configs:

job_name: kubernetes

kubernetes_sd_configs:

role: pod

pipeline_stages:

docker: {}

relabel_configs:

source_labels: [__meta_kubernetes_pod_label_app]

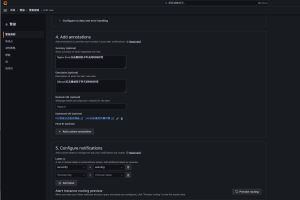

target_label: app- 告警集成

Grafana alert rule configuration
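A Grafana alert rule on Loki data usually evaluates a LogQL metric query, for example count_over_time over the last few minutes, and compares the result with a threshold. The Python below is only a sketch of that evaluation logic, not Grafana's implementation; the 5-minute window, the threshold of 10 and the function names are hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta, timezone


def count_over_time(timestamps, now, window):
    """Count log entries whose timestamp falls in the window (now - window, now]."""
    start = now - window
    return sum(1 for ts in timestamps if start < ts <= now)


def evaluate_rule(timestamps, now, window=timedelta(minutes=5), threshold=10):
    """Return (state, count): 'firing' when the count exceeds the threshold."""
    count = count_over_time(timestamps, now, window)
    return ("firing" if count > threshold else "normal"), count


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    # hypothetical error-log timestamps: one every 20 s over the last 4 minutes
    errors = [now - timedelta(seconds=s) for s in range(0, 240, 20)]
    print(evaluate_rule(errors, now))  # ('firing', 12)
```

A real Grafana rule also has a pending period (the condition must hold for a while before the alert fires) and notification routing, both of which this sketch leaves out.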

- Performance optimization and analysis in practice

Storage optimization tips

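```yaml
# loki-config.yaml (optimization fragment)
schema_config:
  configs:
    - from: 2023-01-01
      store: boltdb-shipper
      object_store: s3
      schema: v11
      index:
        prefix: index_
        period: 24h   # boltdb-shipper requires a 24h index period
chunk_store_config:
  # 720h = 30 days: queries cannot look back further than this. It does not delete
  # anything by itself; actually keeping only 30 days of logs also requires the
  # retention settings of the compactor or table_manager.
  max_look_back_period: 720h
```

Log querying and analysis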

- First, select the log file: filename="/usr/local/nginx/logs/loki-error.log"

- Then, parse the lines as JSON

- Finally, set filter conditions, such as status=500 or server_name=com
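These three steps map onto a single LogQL query: a stream selector on the filename label (which Promtail attaches to file targets automatically), a json parser stage, and then label filters. The Python sketch below assembles such a query plus a request URL for Loki's /loki/api/v1/query_range endpoint; the helper names, the Loki address and the server_name value www.example.com are hypothetical placeholders.

```python
import json
from urllib.parse import urlencode


def build_logql(selector, filters):
    """Build '{k="v", ...} | json | k="v" | ...' from two dicts.

    json.dumps quotes each value and escapes quotes and backslashes, which matches
    LogQL's double-quoted string syntax for ordinary values.
    """
    matchers = ", ".join(f"{k}={json.dumps(v)}" for k, v in selector.items())
    stages = ["{" + matchers + "}", "json"]
    stages += [f"{k}={json.dumps(v)}" for k, v in filters.items()]
    return " | ".join(stages)


def query_range_url(base_url, query, start_ns, end_ns, limit=100):
    """URL for Loki's range query API; start and end are Unix epoch nanoseconds."""
    params = {"query": query, "start": start_ns, "end": end_ns, "limit": limit}
    return f"{base_url}/loki/api/v1/query_range?{urlencode(params)}"


if __name__ == "__main__":
    q = build_logql(
        {"filename": "/usr/local/nginx/logs/loki-error.log"},
        {"status": "500", "server_name": "www.example.com"},
    )
    print(q)
```

The same query string can be pasted into Grafana Explore; the URL form is handy for scripts that pull logs directly.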

Query acceleration strategies

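- Pre-filter with labels: narrow the scope first with precise label matchers.

```logql
{cluster="aws", pod=~"frontend-.+"} |= "timeout"
```

- Avoid expensive full-text matching: prefer |= (substring match) over |~ (regular expression).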
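Label pre-filtering pays off because Loki first uses the label index to pick the matching streams, and only the chunks of those streams are scanned by the line filter. The toy Python model below (not Loki's code; the stream data is made up) evaluates {cluster="aws", pod=~"frontend-.+"} |= "timeout" in that order. LogQL regex label matchers are fully anchored, hence re.fullmatch.

```python
import re


def select_streams(streams, exact, regex):
    """Keep streams whose labels satisfy every exact (=) and regex (=~) matcher."""
    selected = []
    for labels, lines in streams:
        if all(labels.get(k) == v for k, v in exact.items()) and all(
            re.fullmatch(p, labels.get(k, "")) for k, p in regex.items()
        ):
            selected.append((labels, lines))
    return selected


def line_contains(streams, needle):
    """The |= line filter: a plain substring test on each line of the selected streams."""
    return [line for _, lines in streams for line in lines if needle in line]


STREAMS = [  # hypothetical sample data: (label set, log lines)
    ({"cluster": "aws", "pod": "frontend-1"}, ["GET / 200", "upstream timeout"]),
    ({"cluster": "aws", "pod": "backend-1"}, ["db timeout"]),
    ({"cluster": "gcp", "pod": "frontend-2"}, ["timeout"]),
]

if __name__ == "__main__":
    picked = select_streams(STREAMS, {"cluster": "aws"}, {"pod": "frontend-.+"})
    scanned = sum(len(lines) for _, lines in picked)
    print(scanned, line_contains(picked, "timeout"))  # 2 ['upstream timeout']
```

Only 2 of the 4 lines are ever scanned. A |~ filter would run a regular expression on each of those lines instead of a substring check, which is why |= is preferred whenever a literal match is enough.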

- Real-world cases

Case 1: Collecting nginx 5xx logs

Recompiling nginx

```shell
# Upload the installation packages:
# nginx-1.25.3.tar.gz, ngx_http_geoip2_module-3.4.tar.gz, libmaxminddb-1.9.1.tar.gz,
# GeoLite2-City.mmdb, GeoLite2-Country.mmdb

# Recompile nginx
./configure --prefix=/usr/local/nginx --with-compat --with-file-aio --with-threads \
  --with-http_addition_module --with-http_auth_request_module --with-http_dav_module \
  --with-http_flv_module --with-http_gunzip_module --with-http_gzip_static_module \
  --with-http_mp4_module --with-http_random_index_module --with-http_realip_module \
  --with-http_secure_link_module --with-http_slice_module --with-http_ssl_module \
  --with-http_stub_status_module --with-http_sub_module --with-http_v2_module \
  --with-mail --with-mail_ssl_module --with-stream --with-stream_realip_module \
  --with-stream_ssl_module --with-stream_ssl_preread_module \
  --with-cc-opt='-O2 -g -pipe -Wall -Wp,-D_FORTIFY_SOURCE=2 -fexceptions -fstack-protector-strong --param=ssp-buffer-size=4 -grecord-gcc-switches -m64 -mtune=generic -fPIC' \
  --with-ld-opt='-Wl,-z,relro -Wl,-z,now -pie' \
  --add-dynamic-module=/root/nginx/ngx_http_geoip2_module-3.4
```

Modifying the log format

```nginx
# Main context: load the GeoIP2 dynamic module built above
load_module modules/ngx_http_geoip2_module.so;

# http context: JSON log format
log_format json_analytics escape=json '{'
    '"msec": "$msec", '
    '"connection": "$connection", '
    '"connection_requests": "$connection_requests", '
    '"http_referer": "$http_referer", '
    '"http_user_agent": "$http_user_agent", '
    '"request_method": "$request_method", '
    '"server_protocol": "$server_protocol", '
    '"status": "$status", '
    '"pipe": "$pipe", '
    '"server_name": "$server_name", '
    '"request_time": "$request_time", '
    '"gzip_ratio": "$gzip_ratio", '
    '"http_cf_ray": "$http_cf_ray",'
    '"geoip_country_code": "$geoip2_data_country_code",'
    '"geoip2_city_name_en": "$geoip2_city_name_en", '
    '"geoip2_city_name_cn": "$geoip2_city_name_cn", '
    '"geoip2_longitude": "$geoip2_longitude", '
    '"geoip2_latitude": "$geoip2_latitude"'
    '}';

# Use the first IPv4 address in X-Forwarded-For as the client's real IP
map $http_x_forwarded_for $real_ip {
    default "";
    "~^(?P<ip>\d+\.\d+\.\d+\.\d+)" $ip;
    "~^(?P<ip>\d+\.\d+\.\d+\.\d+),.*" $ip;
}

# Choose the log file by status: 4xx and 5xx go to loki-error.log, everything else
# to access.log (the files are meant to end up under the nginx directory)
map $status $log_path {
    ~^4 /logs/loki-error.log;
    ~^5 /logs/loki-error.log;
    default /logs/access.log;
}
# $log_path only takes effect once an access_log directive uses it, for example
# (adjust to your layout): access_log /usr/local/nginx$log_path json_analytics;

geoip2 /usr/local/nginx/modules/GeoLite2-Country.mmdb {
    auto_reload 5m;
    $geoip2_metadata_country_build metadata build_epoch;
    $geoip2_data_country_code source=$real_ip country iso_code;
    $geoip2_data_country_name country names zh-CN;
}

geoip2 /usr/local/nginx/modules/GeoLite2-City.mmdb {
    $geoip2_city_name_en source=$real_ip city names en;
    $geoip2_city_name_cn source=$real_ip city names zh-CN;
    $geoip2_longitude source=$real_ip location longitude;
    $geoip2_latitude source=$real_ip location latitude;
}

fastcgi_param COUNTRY_CODE $geoip2_data_country_code;
fastcgi_param COUNTRY_NAME $geoip2_data_country_name;
fastcgi_param CITY_NAME $geoip2_city_name_cn;

# The health-check directives below come from the third-party nginx_upstream_check_module
# and belong inside an upstream block; the configure command above does not build that module
check interval=3000 rise=2 fall=5 timeout=1000 type=tcp;
check_http_send "HEAD /health/check/status HTTP/1.0\r\n\r\n";
check_http_expect_alive http_2xx http_3xx;
```

Configuring Promtail collection

SQL

cat << EOF >> /data/promtail/config.yaml

server:

http_listen_port: 9080

grpc_listen_port: 0positions:

filename: /tmp/positions.yamlclients:

url: http://192.168.119.191:3100/loki/api/v1/pushscrape_configs:

job_name: nginx

static_configs:

targets:

localhost

labels:

job: nginx

host: $host

agent: promtail

path : /usr/local/nginx/logs/loki-error.log

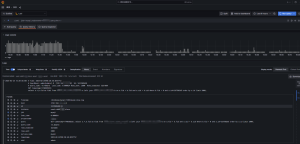

EOF结合grafana呈现效果
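A Grafana panel for this case would typically run a LogQL aggregation along the lines of `sum by (geoip_country_code) (count_over_time({filename="/usr/local/nginx/logs/loki-error.log"} | json | status=~"5.." [5m]))`. As a rough offline equivalent, and purely as a sketch with made-up sample lines, the Python below parses lines in the json_analytics format defined above and counts 5xx responses per country. The $log_path map sends 4xx responses to the same file, so the status check matters.

```python
import json
from collections import Counter


def count_5xx_by_country(lines):
    """Count 5xx responses per geoip_country_code in json_analytics log lines."""
    counts = Counter()
    for line in lines:
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip anything that is not a JSON log line
        if not isinstance(record, dict):
            continue
        # log_format quotes every value, so status arrives as a string such as "502"
        status = str(record.get("status", ""))
        if status.isdigit() and 500 <= int(status) <= 599:
            counts[record.get("geoip_country_code") or "unknown"] += 1
    return counts


if __name__ == "__main__":
    sample = [  # hypothetical lines
        '{"status": "502", "geoip_country_code": "US"}',
        '{"status": "404", "geoip_country_code": "CN"}',
    ]
    print(count_5xx_by_country(sample))  # Counter({'US': 1})
```

An empty geoip_country_code (for example when X-Forwarded-For is missing and $real_ip stays empty) is grouped under "unknown" here.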

Case 2: Collecting database slow query logs

Configuring Promtail collection

```yaml
# cat config.yaml
server:
  http_listen_port: 9080
  grpc_listen_port: 0
positions:
  filename: /tmp/positions.yaml
clients:
  - url: http://192.168.119.191:3100/loki/api/v1/push
scrape_configs:
  - job_name: mysql-slowlogs
    static_configs:
      - targets:
          - localhost
        labels:
          instance: usa4-rds03-slave
          projectname: chat
          job: mysql
          __path__: /database/mysql/1266/mysql-slow.log
    pipeline_stages:
      # Each "# Time:" line starts a new multi-line slow-log entry
      - multiline:
          firstline: '#\s*Time:.*'
      - regex:
          expression: '#\s*Time:\s*(?P<timestamp>.*)\n#\s*User@Host:\s*(?P<user>[^\[]+).*@\s*(?P<host>.*]).*Id:\s*(?P<id>\d+)\n#\s*Query_time:\s*(?P<query_time>\d+\.\d+)\s*Lock_time:\s*(?P<lock_time>\d+\.\d+)\s*Rows_sent:\s*(?P<rows_sent>\d+)\s*Rows_examined:\s*(?P<rows_examined>\d+)\n(?P<query>(?s:.*))$'
      # Only low-cardinality fields become labels. Per-query values (timestamp, id,
      # query_time, lock_time, rows_sent, rows_examined, query) would create a new
      # stream for almost every entry, so they stay in the log line and can be
      # extracted at query time.
      - labels:
          user:
          host:
      - drop:
          expression: "^ *$"
          drop_counter_reason: "drop empty lines"
```

Viewing the results in Grafana
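Before relying on the dashboards, it can be worth checking offline that the multiline and regex stages split a slow-log entry as intended. The Python below reuses the same two patterns (Python's re accepts the syntax used here, including (?P<name>...) groups and the scoped (?s:...) flag) and mimics the stages only roughly: a line matching firstline starts a new entry, and entries made only of spaces are dropped. The sample entry is made up in the usual MySQL slow-log layout, and the grouping ignores Promtail details such as max_wait_time.

```python
import re

# Same patterns as the multiline (firstline) and regex stages in config.yaml
FIRSTLINE = re.compile(r"#\s*Time:.*")
ENTRY = re.compile(
    r"#\s*Time:\s*(?P<timestamp>.*)\n"
    r"#\s*User@Host:\s*(?P<user>[^\[]+).*@\s*(?P<host>.*]).*Id:\s*(?P<id>\d+)\n"
    r"#\s*Query_time:\s*(?P<query_time>\d+\.\d+)\s*Lock_time:\s*(?P<lock_time>\d+\.\d+)"
    r"\s*Rows_sent:\s*(?P<rows_sent>\d+)\s*Rows_examined:\s*(?P<rows_examined>\d+)\n"
    r"(?P<query>(?s:.*))$"
)
EMPTY = re.compile(r"^ *$")  # the drop stage's expression


def split_entries(lines):
    """Roughly mimic the multiline stage: a firstline match starts a new entry."""
    entries, current = [], []
    for line in lines:
        if FIRSTLINE.search(line) and current:
            entries.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        entries.append("\n".join(current))
    # Mimic the drop stage: discard entries made only of spaces
    return [e for e in entries if not EMPTY.match(e)]


def parse_entry(entry):
    """Apply the regex stage; returns the named groups, or None if it does not match."""
    match = ENTRY.match(entry)
    return match.groupdict() if match else None


SAMPLE = [  # made-up slow-log entry
    "# Time: 2023-10-10T08:00:00.123456Z",
    "# User@Host: app[app] @  [10.0.0.5]  Id:  12345",
    "# Query_time: 2.500000  Lock_time: 0.000100 Rows_sent: 10  Rows_examined: 100000",
    "SET timestamp=1696924800;",
    "SELECT * FROM orders WHERE status = 'pending';",
]

if __name__ == "__main__":
    for entry in split_entries(SAMPLE):
        fields = parse_entry(entry)
        print(fields["user"], fields["host"], fields["query_time"], fields["rows_examined"])
```

If parse_entry returns None for real entries from your server, the slow-log layout differs from this sample (MySQL versions vary), and the regex stage needs adjusting before the Grafana views will show the extracted fields.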

- Summary and outlook

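Overall, Loki is relatively lightweight, scales well, and has a simplified storage architecture, but it depends on additional components (such as Promtail for collection and Grafana for visualization), and it uses its own query language, LogQL, which comes with a learning curve. Loki is a good choice when the data volume is moderate, the data is time-series in nature (such as application logs and infrastructure metrics), and applications run as Kubernetes Pods.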

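Loki is becoming a standard log management solution for the cloud-native era, and its integration with the following technologies is worth watching: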

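- OpenTelemetry: a unified collection standard for logs, metrics, and traces

- WAL (Write-Ahead Logging): improves reliability in high-throughput scenarios

- Edge computing: Loki's lightweight design suits log collection on IoT devices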


Cloud and security managed services expert